Are ‘Free-Shaped’ Dogs Better Problem Solvers?

A look at the criticisms of lure-reward training by Carmen LeBlanc MS ACAAB CPDT. First published in BARKS from the Guild, April 2014, pp. 12-18

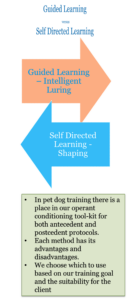

Most professional dog trainers have heard about criticisms of lure-reward training in recent years. These criticisms have been made along with enthusiastic claims about the superiority of free-shaped (unprompted, trial-and-error) clicker training.

Those of us who use free-shaping understand the enthusiasm. It is challenging and exciting to communicate with dogs in such a free-form way, developing a new behavior one small increment at a time.

As technicians of scientific procedures, however, it is important that we take a collective, critical look at what is being said. Are these criticisms and claims supported at all by empirical studies? Do they represent the cutting edge of what is happening in dog training, or is a piece of history repeating itself? Has some objectivity been lost as one reward-based operant technology is criticized and another is lauded?

It would be a shame to allow misunderstandings to drive a wedge between our fledgling ranks, as we all work to convince our clients of the powers of positive reinforcement. Fortunately, humane, no-force trainers share much common ground.

What follows are some key criticisms and claims, along with an explanation of the history, uses, strengths and pitfalls of these training technologies, based on scientific texts and literature in applied behavior analysis and learning theory.

“Luring isn’t training. It implies doubt in the laws of learning.”

A lure is one kind of orienting prompt (sticks and balls are others) that is used to guide a dog into a desired behavior (Lindsay, 2000). These prompts are antecedents, stimuli that come before behavior. Since the three-term contingency of A-B-C (antecedent-behavior-consequence) is the basic unit of analysis in learning theory, we can’t just dismiss A.

Like other prompts — including verbal ones like high-pitched noises, gestural ones like clapping or crouching, environmental ones like using a wall to get a straight heel position — a lure is used to get the desired behavior during the early part of training and then is gradually eliminated after the behavior has been strengthened through reinforcement. Since fading is the technology for eliminating the prompt, prompting and fading go hand in hand.

Prompting-fading and shaping have distinct purposes. Fading is about stimulus control, the A part of A-B-C. It typically begins with a behavior that is already in the animal’s repertoire and gradually changes what controls (or evokes) it. So a ‘sit’ is first evoked with a lure, and over several repetitions the lure is faded out as a gestural or verbal cue is faded in. Whether we are shapers or prompters or both, we all use fading when installing verbal cues.

In contrast, shaping is about developing a behavior that is not yet in the animal’s repertoire, the B part of A-B-C. Shaping begins with an approximation of the final desired behavior and gradually develops it into the final one. In short, fading changes the controlling stimuli of an existing behavior whereas shaping develops new behavior. Both techniques rely on the C part of A-B-C, reinforcement, to strengthen or maintain behavior.

In the real world, though, the distinction isn’t drawn so neatly, since the two procedures are often used together. We use prompts during shaping (e.g. to teach rollover or spin) and some successive approximations during luring (e.g. getting a full down). And in both lure-reward training and shaping, clickers can be used.

Also known as ‘errorless learning,’ prompting-fading has been around since Skinner’s, Terrace’s and others’ work in the 1930s to 1960s, which showed that, with sufficient care, a discrimination could be learned with few or no errors (Skinner, 1938; Schlosberg & Solomon, 1943; Terrace, 1963, 1966). Skinner and Terrace both believed that errors resulting from ‘trial and error’ discrimination training (i.e. shaping) were aversive and harmful. With children and animals, shaping often provoked problem behaviors when subjects made mistakes and lost reinforcement. Aggression, self-injury, tantrums, frustration, apathy and escape behaviors were all cited as direct consequences of shaping (Touchette & Howard, 1984).

So errorless techniques were developed to obtain behaviors more efficiently, with a steady rate of reinforcement, and less wear and tear on the learner. At the time, this caused quite a paradigm shift since it was widely assumed that errors were necessary for learning. A considerable body of evidence has since accumulated that errorless techniques are successful with human and nonhuman animals (Schroeder, 1997).

The notion that ‘prompting is not training’ is somewhat understandable since we know that consequences, not antecedents, determine behavior. We all strive to focus our clients on consequences rather than antecedents. Although antecedents do not cause behavior to occur, they increase the likelihood of behavior if their presence has been associated with past reinforcement of the behavior. In short, B is a function of C in the presence of A.

So a dog learns that in the presence of antecedent X (mom), sits are noticed and reinforced, but in the presence of antecedent Y (child), sits are not reinforced. Sits become much more likely in front of mom, who is the discriminative stimulus (SD) for reinforcement for sits.
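The relation "B is a function of C in the presence of A" can be illustrated with a toy calculation. The sketch below is not drawn from the cited literature. It assumes a simple linear update rule, and the function name, starting probability and learning rate are hypothetical values chosen only for illustration.

```python
def expected_sit_probability(reinforced: bool, trials: int = 50,
                             start: float = 0.3, rate: float = 0.2) -> float:
    """Expected probability of a sit after `trials` opportunities.

    Toy model (an assumption, not a published procedure): on each trial
    the dog sits with probability p. A reinforced sit nudges p toward 1,
    and an unreinforced sit nudges p toward 0.
    """
    p = start
    for _ in range(trials):
        if reinforced:
            # Sits occur with probability p and are reinforced.
            p += p * rate * (1.0 - p)
        else:
            # Sits occur with probability p and go unreinforced.
            p -= p * rate * p
    return p


# Same dog, same starting point, two different antecedents.
mom = expected_sit_probability(reinforced=True)     # antecedent X: sits pay off
child = expected_sit_probability(reinforced=False)  # antecedent Y: sits do not
print(f"sit in front of mom:   {mom:.2f}")
print(f"sit in front of child: {child:.2f}")
```

Under these assumptions, the expected probability of a sit climbs toward 1 in front of mom and drifts toward 0 in front of the child, even though both start at the same level. The antecedent does not cause the sit; it signals which consequence is in effect.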

“Luring is coercive.”

Interestingly, this same criticism was leveled at all operant conditioning during Skinner’s career. Whether used in fading or shaping, reinforcement was perceived as manipulative. The power that reinforcement demonstrated to change behavior — including humans’ behavior — made people uncomfortable. It ran counter to strong beliefs about human free will and superiority over animals. Today’s critics have focused on one operant technique, using food as an orienting prompt, but perhaps a part of history is replaying today.

Furthermore, it seems inconsistent for luring critics to acknowledge the truth that reinforcement is the power of operant conditioning, yet say that antecedent lures are so powerful they render animals helpless. The fact is, positive reinforcement (R+) — after the behavior not before — is so powerful that any operant technology that uses it can produce self-injurious behavior.

Rasey & Iversen (1993) shaped rats, using food reinforcers, to lean so far over a ledge that they fell off (they were saved by a net). Schaefer (1970) shaped self-injurious head banging in rhesus monkeys. Learning textbooks usually supply several examples of parents inadvertently shaping obnoxious, even self-injurious behavior in their children by ignoring (and subsequently paying attention to) increasing levels of destructive behavior like screaming or head-banging.

Many of us have seen a cartoon in the Association of Professional Dog Trainers (APDT) Chronicle of a hapless dog appearing before a judge, protesting “But I was lured!” The dog could just as truthfully say, “But I was shaped!” In reality, neither luring nor shaping is ‘coercive,’ but both methods powerfully determine behavior through reinforcement. Murray Sidman’s (1989) definition may help alleviate confusion about what learning theorists consider ‘coercive’: “To be coerced is to be compelled under duress or threat to do something ‘against our will…’. Control by positive reinforcement is non-coercive; coercion enters the picture when our actions are controlled by negative reinforcement or punishment” (pp. 31–32).

Perhaps a more accurate word to capture some trainers’ concerns about lures is ‘intrusive.’ This term is used in textbooks to describe stronger prompts like physical guidance or force. Because of a treat’s salience, we could construe it as intrusive. Of course, the intrusiveness of physical force is light-years away from the intrusiveness of a hot dog morsel. Both are quite salient to the animal, but force is aversive and food is deliriously enticing. It’s easy to make food lures less salient or intrusive by lowering their value. As Karen Overall points out (1997), dog biscuits are generally not sufficient motivation, but some foods are so desirable that the dog is too stimulated or distracted by them. Something between these two extremes is preferred.

“Luring produces passive dogs. Shaping produces ‘creative’ ones.”

To unravel these claims, we first have to ground them in precise definitions of observable behaviors. What does ‘creative’ mean? What behaviors constitute creativity? Behavioral (operational) definitions are key to the scientific process, because they recast fuzzy concepts or labels into specific behaviors that two or more people can agree on, observe and measure.

A term commonly used in the literature that encompasses the concepts of creativity and problem-solving is response generalization or response variation. Variation can be measured along dimensions such as speed, topography, latency, intensity, position and duration. It means that a variety of behaviors (or variations of one behavior) occur in the presence of a stimulus or situation. One reason they occur is that they are all functionally equivalent; they achieve the same consequence. A dog with a food toy, for example, quickly learns that pawing, nosing, licking, biting are all helpful in getting some food.
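One way to turn "variability" into a number that two observers can agree on is an entropy-based index, similar in spirit to the measures used in research on operant variability. The following is a minimal sketch, assuming each response has already been coded into one of a fixed set of categories. The function name and the sample data are hypothetical.

```python
import math
from collections import Counter


def variability_index(responses, n_categories):
    """Normalized Shannon entropy of the observed response categories.

    Returns 0.0 when a single response is repeated every time and 1.0
    when all `n_categories` responses are used equally often.
    """
    total = len(responses)
    if total == 0 or n_categories < 2:
        return 0.0
    counts = Counter(responses)
    entropy = -sum((c / total) * math.log2(c / total) for c in counts.values())
    # max() guards against a floating-point negative zero.
    return max(0.0, entropy / math.log2(n_categories))


# Hypothetical observation logs of a dog working on a food toy.
varied = ["paw", "nose", "lick", "bite"] * 5   # all four used equally
mixed = ["paw"] * 10 + ["nose"] * 10           # only two of four used
stereotyped = ["paw"] * 20                     # one response repeated

for label, log in [("varied", varied), ("mixed", mixed), ("stereotyped", stereotyped)]:
    print(label, variability_index(log, n_categories=4))
```

With these sample logs the index is 1.0, 0.5 and 0.0 respectively. An index like this only captures variation across coded categories (topography); speed, latency, intensity and duration would each need their own measure.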

So does shaping produce more response variability than prompting? This question hasn’t been directly studied in the literature, so we have to glean our answers indirectly. First, two caveats are in order:

- We should remember that all animals are adapted problem solvers. They are biological learning machines that interact with the environment and have their behavior shaped (selected) by its consequences (Pierce & Epling, 1999). In fact, Skinner said that evolution and operant learning operate by the same processes: trying or producing new things (variation) and discarding what doesn’t work (selection) (Skinner, 1990). No operant technique can undo what thousands of years of evolution have produced.

- Behavior is naturally variable. But in the absence of explicit reinforcement for variability, responding becomes more stereotyped (repetitive) and less variable with continued operant conditioning (Domjan, 2003). By definition, shaping narrows the range of behaviors as we select the behaviors we want and extinguish the ones we do not.

Two key procedures that involve shaping, however, have resulted in increased response variation: 1) extinction and 2) explicitly reinforcing variation or novelty, as Pryor did with a porpoise (Pryor, Haag, & O’Reilly, 1969) and as she has taught with her well known ‘101 Things to Do with a Box’ game (Pryor, 1999).

In the first procedure, pure extinction (when a behavior that was previously reinforced is no longer reinforced), behavior can increase or become more variable in frequency, duration, topography, and intensity. In addition, extinction bursts can briefly produce novel behaviors (ones that have not occurred in that situation) (Miltenberger, 2004). For example, when a child’s parents no longer reinforce the child’s nighttime crying, he cries louder and longer (increased intensity and duration) and screams and hits the pillow (novel behaviors).

However, the evidence of increased variability with partial extinction, when reinforcement is not reduced to zero (as is the case with shaping), is mixed. Some studies report increased variability, but others show small or no effects (Grunow & Neuringer, 2002).

Another route to increased variability is when variability is made the explicit criterion for reinforcement, as in Pryor’s study and her box game. Much research since then has shown that repeating and varying behaviors are in part operant skills under the control of reinforcing consequences (e.g. Neuringer, Deiss & Olson, 2000; Stokes, Mechner, & Balsam, 1999). In other words, if stereotypy is reinforced, it will increase, and if variability is reinforced, that will increase.

One study with preschoolers showed that the frequency of children’s creative play with building blocks could be increased through social reinforcement (praise) from the teacher (Goetz & Baer, 1973).

Variability as an operant has considerable applied potential when attempting to train an animal or person to solve problems. A study with rats as a model (Arnesen, 2000) showed that prior reinforcements of response variations increased the rats’ exploration of novel objects and discovery of reinforcers (problem solving), even in a novel environment.

Nevertheless, researchers who study creativity debate whether reinforcement facilitates or impedes it. There is some support for the facilitation hypothesis, but also a body of literature that indicates that reinforcement interferes with creativity (Neuringer, 2004). If the latter is true, interference would occur with both lure-reward training and shaping, since both use reinforcement.

One way to look at the issue is to consider that some problems require flexibility and others require rigidity. Variability is reinforced when writing a poem. But rote repetition is reinforced when solving long division problems. Similarly, in assistance or scent work, a dog’s variability on some tasks would more likely be reinforced whereas in a pet owner’s home a more rigid obedience might be.

Turning back to prompting-fading techniques, what does the research say about their limitations? Again, the evidence is mixed. Terrace’s 1960s studies and many since then (e.g. Dorry & Zeaman, 1973; Fields, 1980; Robinson & Storm, 1978) have directly compared errorless techniques with trial-and-error and have found errorless techniques to be superior, both with animals and with developmentally disabled children. These researchers have concluded that animals and people learn complex discriminations more readily using errorless training (prompting-fading) than trial-and-error (shaping) (Klein, 1987).

But others, citing different studies, have concluded the opposite. They say that although trial-and-error has more adverse side effects compared to errorless training, it also results in greater flexibility when what is learned has to be modified later. These studies have shown that fading narrows attention to specific features of the prompt stimulus, which can impede learning in situations where the correct responses are changed or controlled by multiple cues (Marsh & Johnson, 1968; Gollin & Savoy, 1968; Jones & Eayrs, 1992).

One study these researchers cite taught one group of pigeons an errorless discrimination and another group a trial-and-error discrimination. The errorless group had extreme difficulty changing their behavior when the discrimination was reversed compared to the trial-and-error group, which handled the reversal well (Marsh & Johnson, 1968).

Another issue with fading cited in the literature is that research has not yet determined exactly how to produce a successful transfer from prompted to unprompted responding. There are six classes of prompts and three types of fading, and which of these combinations is optimal, for which participants or behaviors, is unknown.

The same is true for shaping, however. Research hasn’t determined exactly how many successive steps, what size of steps, or how many trials at each step are optimal. In fading, prompt dependence can occur if prompts are offered too long, and premature removal of prompts can lead to persistent errors. Similarly, in shaping, the learner can get stuck on one approximation if it is reinforced too long, or lose the approximation if it is reinforced too briefly. So both methods are presented in textbooks as something of an art, involving planned and impromptu judgment calls depending on the trainer, the learner and the situation (Martin & Pear, 2003).

Some researchers believe that errorless procedures are best suited for the learning of basic facts — like arithmetic and spelling — things that won’t change. In contrast, since trial-and-error learning may produce more flexibility in responding, it may be more appropriate in problem solving situations or in those in which the contingencies could change (Pierce & Epling, 1999).

Presuming these limitations with errorless methods do exist, dog trainers should nevertheless resist casting operant techniques in categorical good-bad roles, and accept that fading and shaping each has its distinct advantages, pitfalls, and unique areas of most effective application.

If shaping does produce greater flexibility, and fading does produce more fixed learning, we should not assume that one outcome is a virtue and the other a vice. Many pet owners and handlers of working dogs would likely prefer strict compliance and not an ounce of variability. On the other hand, many trainers probably prefer more variability.

There does not seem to be a preponderance of evidence yet to decide the issue. That can be frustrating, because it leaves us without quick, clear-cut answers. Instead, we have to think like scientists, weigh the evidence, and keep an open yet skeptical mind. This issue challenges all trainers to continue learning and sharpening our skills in practicing and teaching all noncoercive operant techniques, and to keep our training as flexible and individualized as possible.

References

Arnesen, E. M. (2000). Reinforcement of Object Manipulation Increases Discovery. Unpublished Bachelor’s Thesis, Reed College.

Domjan, M. (2003). The Principles of Learning and Behavior. Belmont, CA: Wadsworth/Thomson Learning.

Dorry, G. W. & Zeaman, D. (1973). The Use of a Fading Technique in Paired-Associate Teaching of a Reading Vocabulary with Retardates. Mental Retardation, 11, 3–6.

Fields, L. (1980). Enhanced Learning of New Discriminations after Stimulus Fading. Bulletin of the Psychonomic Society, 15, 327–330.

Goetz, E. & Baer, D. (1973). Social Control of Form Diversity and the Emergence of New Forms in Children’s Blockbuilding. Journal of Applied Behavior Analysis, 6, 209–217.

Gollin, E. S. & Savoy, P. (1968). Fading Procedures and Conditional Discrimination in Children. Journal of the Experimental Analysis of Behavior, 11, 443–451.

Grunow, A. & Neuringer, A. (2002). Learning to Vary and Varying to Learn. Psychonomic Bulletin & Review, 9, 250–258.

Jones, R. & Eayrs, C. B. (1992). The Use of Errorless Learning Procedures in Teaching People with a Learning Disability: A Critical Review. Mental Handicap Research, 5, 204–210.

Klein, S. B. (1987). Learning: Principles and Applications. New York: McGraw Hill Book Company.

Lindsay, S. R. (2000). Handbook of Applied Dog Behavior and Training: Adaptation and Learning, Vol. 1. Ames, IA: Iowa State Press.

Marsh, G. & Johnson, R. (1968). Discrimination Reversal Following Learning without ‘Errors.’ Psychonomic Science, 10, 261–262.

Martin, G. & Pear, J. (2003). Behavior Modification: What It Is and How To Do It. Upper Saddle River, NJ: Prentice-Hall.

Miltenberger, R. G. (2004). Behavior Modification: Principles and Procedures. Belmont, CA: Wadsworth/Thomson Learning.

Neuringer, A. (2004). Reinforced Variability in Animals and People. American Psychologist, 59, 891–906.

Neuringer, A., Deiss, C. & Olson, G. (2000). Reinforced Variability and Operant Learning. Journal of Experimental Psychology: Animal Behavior Processes, 26, 98–111.

Overall, K. (1997). Clinical Behavioral Medicine for Small Animals. St. Louis, MO: Mosby, Inc.

Pierce, W. D. & Epling, W. F. (1999). Behavior Analysis and Learning. Upper Saddle River, NJ: Prentice-Hall, Inc.

Pryor, K. (1999). Don’t Shoot The Dog! The New Art of Teaching and Training. New York: Bantam Books.

Pryor, K., Haag, R. & O’Reilly, J. (1969). The Creative Porpoise: Training for Novel Behavior. Journal of the Experimental Analysis of Behavior, 12, 653–661.

Rasey, H. W. & Iversen, I. H. (1993). An Experimental Acquisition of Maladaptive Behavior by Shaping. Journal of Behavior Therapy & Experimental Psychiatry, 24, 34–43.

Robinson, P. W. & Storm, R. H. (1978). Effects of Error and Errorless Discrimination Acquisition on Reversal Learning. Journal of the Experimental Analysis of Behavior, 29, 517–525.

Schaefer, H. H. (1970). Self-Injurious Behavior: Shaping ‘Head Banging’ in Monkeys. Journal of Applied Behavior Analysis, 3, 111–116.

Schlosberg, H. & Solomon, R. L. (1943). Latency of Response in a Choice Discrimination. Journal of Experimental Psychology, 33, 22–39.

Schroeder, S. (1997). Selective Eye Fixations During Transfer of Discriminative Stimulus Control. In D. M. Baer & E. M. Pinkston (Eds.), Environment and Behavior. Boulder, CO: Westview Press.

Sidman, M. (1989). Coercion and Its Fallout. Boston, MA: Authors Cooperative.

Skinner, B. F. (1990). Can Psychology Be a Science of Mind? American Psychologist, 45, 1206–1210.

Stokes, P. D., Mechner, F. & Balsam, P. D. (1999). Effects of Different Acquisition Procedures on Response Variability. Animal Learning & Behavior, 27, 28–41.

Terrace, H. S. (1963). Errorless Transfer of a Discrimination across Two Continua. Journal of the Experimental Analysis of Behavior, 6, 223–232.

Terrace, H. S. (1966). Stimulus Control. In W. K. Honig (Ed.), Operant Behavior: Areas of Research and Application. New York: Appleton-Century-Crofts.

Touchette, P. E. & Howard, J. S. (1984). Errorless Learning: Reinforcement Contingencies and Stimulus Control Transfer in Delayed Prompting. Journal of Applied Behavior Analysis, 17, 175–188.