Barks Blog

Herrnstein’s Matching Law and Reinforcement Schedules

Have you heard trainers talking about the matching law? This post covers a bit of its history and the nuts and bolts of what it says. I am providing this rather technical article so that I have something to link to from other pieces about how the matching law has affected the way I train my dogs.

In 1961, R.J. Herrnstein published a research paper containing an early formulation of what we now call the matching law (Herrnstein, 1961). In plain English, the matching law says that we (animals, including humans) perform behaviors in a ratio that matches the ratio of available reinforcement for those behaviors. For instance, in the simplest case, if Behavior 1 is reinforced twice as often as Behavior 2 (with the same amount per payoff), we will perform Behavior 1 about twice as often as Behavior 2.

The matching law can be seen as a mathematical codification of Thorndike’s Law of Effect:

Of several responses made to the same situation, those which are accompanied or closely followed by satisfaction to the animal will, other things being equal, be more firmly connected with the situation, so that, when it recurs, they will be more likely to recur; those which are accompanied or closely followed by discomfort to the animal will, other things being equal, have their connections with that situation weakened, so that, when it recurs, they will be less likely to occur. The greater the satisfaction or discomfort, the greater the strengthening or weakening of the bond. — (Thorndike, 1911, p. 244)

It gets complex fast, though.

Following is Paul Chance’s simplified version of the basic matching law (Chance, 2003). I’m using Chance’s equation because Herrnstein’s original choice of variables is very confusing. Please keep reading, even if you don’t like math. This is the only formula I’m going to cite.

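$$\frac{B_1}{B_2} = \frac{r_1}{r_2}$$

where B1 and B2 are two different behaviors and r1 and r2 are the corresponding rates of reinforcement for those behaviors (how often each behavior pays off under its reinforcement schedule).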

This is the same thing I said in words above about Behavior 1 and Behavior 2.
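As a minimal sketch (written for this post, not taken from Chance or Herrnstein), the two-behavior matching law, B1/B2 = r1/r2, can be expressed as a small Python function. The function names are hypothetical. The second function uses the algebraically equivalent proportion form, B1/(B1 + B2) = r1/(r1 + r2), which is closer to how Herrnstein stated it in 1961. Exact fractions keep the arithmetic readable.

```python
from fractions import Fraction


def matching_ratio(r1, r2):
    """Predicted ratio B1/B2 under the simple two-behavior matching law.

    r1 and r2 are rates of reinforcement (e.g., reinforcers per minute) for
    Behavior 1 and Behavior 2; the amount per payoff is assumed to be equal.
    """
    if r2 == 0:
        raise ValueError("r2 must be nonzero for the ratio form")
    return Fraction(r1) / Fraction(r2)


def matching_share(r1, r2):
    """Predicted share of all responses going to Behavior 1: B1 / (B1 + B2)."""
    return Fraction(r1) / (Fraction(r1) + Fraction(r2))


# Behavior 1 is reinforced twice as often as Behavior 2 (same payoff each time).
print(matching_ratio(2, 1))  # 2
print(matching_share(2, 1))  # 2/3
```

In words: twice the reinforcement predicts twice the behavior, which means Behavior 1 gets about two thirds of all the responses.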

Note that this version of the formula deals with only two behaviors. However, the formula is robust enough to extend to multiple behaviors, and it also holds when you choose one behavior to focus on and lump all other behaviors and their reinforcers into terms for “other.” Later, a researcher named William Baum did some fancy math and incorporated logarithmic terms to account for two commonly seen departures from strict matching: bias and undermatching (Baum, 1974).
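For readers who want a peek at Baum’s version, here is a hedged sketch of the generalized matching law in its usual power-function form, B1/B2 = b(r1/r2)^a, which becomes a straight line after taking logarithms: log(B1/B2) = a·log(r1/r2) + log b. The parameter names `sensitivity` (a) and `bias` (b) and the example values are illustrative choices, not fitted data.

```python
import math


def generalized_matching_ratio(r1, r2, sensitivity=1.0, bias=1.0):
    """Generalized matching law, power-function form:

        B1/B2 = bias * (r1/r2) ** sensitivity

    sensitivity == 1 and bias == 1 reduce to simple matching.
    sensitivity < 1 is "undermatching": preference is less extreme than
    the reinforcement ratio. bias != 1 is a constant preference for one
    alternative regardless of the schedules.
    """
    return bias * (r1 / r2) ** sensitivity


def log_form(r1, r2, sensitivity=1.0, bias=1.0):
    """Same prediction computed via the logarithmic (straight-line) form."""
    return math.exp(sensitivity * math.log(r1 / r2) + math.log(bias))


# A 5:1 reinforcement ratio with hypothetical parameter values.
print(generalized_matching_ratio(5, 1))            # 5.0 (strict matching)
print(round(generalized_matching_ratio(5, 1, 0.8), 2))  # undermatching: about 3.62
```

With a sensitivity below 1, the animal still prefers the richer alternative, just not by the full 5:1 margin.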

There is something else we need to understand to really “get” the matching law. We need to understand schedules of reinforcement, both ratio and interval schedules. We often use ratio schedules in training our dogs. We don’t use interval schedules as often. They are very common in life in general, though. First, let’s review ratio schedules.

Ratio Schedules

Woodpeckers who are pecking for food are reinforced on a variable ratio schedule: some unpredictable number of pecks will dislodge a bug to eat or uncover some sap.

Most of us are at least a bit familiar with ratio schedules, where reinforcement is based on the number of responses. When training a new behavior, we usually reinforce every iteration of the behavior. Some trainers deliberately thin this ratio later, and only reinforce a certain percentage of the iterations.

There has been a ton of research on schedules and their effects on behavior. In the standard terminology, a schedule that reinforces exactly every 5th behavior is called Fixed Ratio 5, abbreviated FR5. (This is generally a bad idea in real life. Animals learn the pattern and lose motivation during the “dry spells.”) If instead the number of behaviors required varies unpredictably from one reinforcer to the next, but you still reinforce every 5th behavior on average, that’s called a variable ratio schedule: Variable Ratio 5, or VR5. Introducing that variability makes behaviors more resistant to extinction, but there are many other factors to consider when deciding how often to reinforce. More on that in later posts!
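To make the FR/VR distinction concrete, here is a small sketch that lists which responses earn a reinforcer. It is a simplification under stated assumptions: the helper names are hypothetical, and the VR requirements are drawn uniformly from 1 to 2×mean − 1 only because that is an easy way to get the right average, not because real VR schedules must be built that way.

```python
import itertools
import random


def ratio_schedule(requirements, n_responses):
    """Return the (1-based) response numbers that earn a reinforcer.

    `requirements` is an iterator giving how many responses each successive
    reinforcer requires: a constant gives a fixed ratio, random draws a variable one.
    """
    reinforced = []
    count = 0
    needed = next(requirements)
    for response in range(1, n_responses + 1):
        count += 1
        if count == needed:
            reinforced.append(response)
            count = 0
            needed = next(requirements)  # requirement for the next reinforcer
    return reinforced


def variable_ratio(mean, rng):
    """Hypothetical VR generator: each requirement is drawn uniformly from
    1 to 2*mean - 1, so requirements average `mean`."""
    while True:
        yield rng.randint(1, 2 * mean - 1)


# FR5: exactly every 5th response pays.
print(ratio_schedule(itertools.repeat(5), 20))  # [5, 10, 15, 20]

# VR5: every 5th response on average, but the learner can't predict which.
print(ratio_schedule(variable_ratio(5, random.Random(1)), 20))
```

Over a long run both schedules pay about once per 5 responses; the difference is only in how predictable the next payoff is.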

Interval Schedules

In an interval schedule, a certain amount of time must elapse before the behavior will earn a reinforcer. The number of performances of the behavior is not counted. Interval schedules come in fixed and variable types as well. A fixed interval schedule of 5 minutes would be noted as FI5; a variable interval schedule of 5 minutes (remember, that means the average time is 5 minutes) would be VI5.
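Here is a sketch of an interval schedule, assuming (as in the usual lab arrangement) that once a reinforcer becomes available it waits until the next response collects it, and that the clock restarts when it is delivered. A version where unclaimed payouts expire, like the imaginary slot machines later in this post, would need an extra time limit. The function name is hypothetical, and drawing VI waits from an exponential distribution is just one common simulation choice.

```python
import itertools
import random


def interval_schedule(intervals, response_times):
    """Return the response times that earn a reinforcer on an interval schedule.

    `intervals` is an iterator of waits: each reinforcer becomes available that
    long after the start (for the first) or after the previous reinforcer.
    Responses before then earn nothing; the first response after that point
    collects it. Assumes an available reinforcer waits until collected.
    """
    reinforced = []
    available_at = next(intervals)
    for t in sorted(response_times):
        if t >= available_at:
            reinforced.append(t)
            available_at = t + next(intervals)  # clock restarts at delivery
    return reinforced


# FI5 (minutes): early responses earn nothing, however many there are.
print(interval_schedule(itertools.repeat(5), [1, 2, 3, 6, 7, 12]))  # [6, 12]

# VI5: waits drawn from an exponential distribution with mean 5 minutes
# (one common way to simulate a variable interval; an assumption here).
rng = random.Random(42)
vi5 = (rng.expovariate(1 / 5) for _ in itertools.count())
print(interval_schedule(vi5, range(60)))  # one response per minute for an hour
```

Responding every six seconds instead of every minute would not raise the payoff much: on FI5 it tops out at about one reinforcer per 5 minutes no matter how busy the animal is.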

Interval schedules are not the same as duration schedules, which are also time-based. We use duration schedules much more often in animal training. During duration schedules, there is a contingency on the animal’s behavior during the whole time period, such as a stay. In interval schedules, there is no contingency during the “downtime.” The animal just has to show up and perform the behavior after the interval has passed in order to get the reinforcer.

Interval schedules are common in human life, especially around events that run on a timetable. Consider baking cookies. You put them in the oven and set a timer for 15 minutes. But you are an experienced baker and you know that baking is not perfectly predictable. In effect, your schedule is Variable Interval 15: the cookies could be ready a little before or a little after 15 minutes. So you start performing the behavior of walking over to the oven to check the cookies after 12 or 13 minutes. But only after the cookies are done and you take them out of the oven do you get the positive reinforcement for the behavior chain: perfectly baked cookies. And at the beginning of the baking period, you are likely to do something else for a while. You know there is no reinforcement available for visiting the oven at the beginning of the period; the cookies won’t be ready.

Your dogs heed scheduled events, as well. If you feed them routinely at a certain time, you’ll get more and more milling around the kitchen (or staring into it) as that time approaches.

Tigers are ambush predators. After patient waiting, some of their attacks are reinforced on a variable interval schedule.

Interval Schedules and the Matching Law: Translating Herrnstein’s Experiment

Let’s apply what we learned about interval schedules to better understand the matching law. Here is an example that is parallel to Herrnstein’s original experiment.

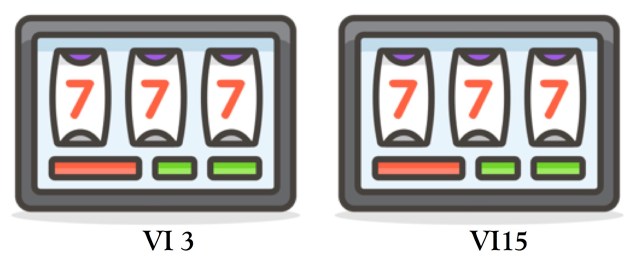

Imagine you are playing something like a slot machine, except that it is guaranteed to pay out approximately (not exactly) every 3 minutes, no matter how many times you pull the handle, with the stipulation that you must pull the handle once after it is “ready” for it to pay off. This is an interval schedule. (In real life, slot machines are on ratio schedules; that is, their payoffs depend on the number of times the levers are pulled and are controlled by complex algorithms that are regulated by law.) The schedule of our interval-based machine would be called VI3, the time units being minutes. When you first start playing with it, you don’t know about the schedule.

There is another machine right next to it. It pays out about every 15 minutes, VI15, although again you don’t know this. Let’s say that each payout for each machine is $1,000 and you can play all day. No one else is playing.

It won’t take you long to realize that Machine 1 pays out a lot more often. But every once in a while, Machine 2 pays out, too. Pretty soon you’ll get a feel for both machines’ schedules. You’ll gravitate to Machine 1. But no matter how many times you pull the lever, it won’t pay out more often than every few minutes. There is a downtime. Also, you’ll find out that you can’t just let the payouts “accrue.” If you miss pulling the lever during one period where a payout is available, you miss out on that reinforcement.

So you will continue to modify your behavior. You won’t bother to pull the lever for a while after Machine 1 pays off. It rarely pays again that fast. You’ve got time on your hands. What do you do? Go over to Machine 2 and pull that lever. Every 15 minutes or so, that gets you a payout as well. As long as there is no penalty for switching and no other confounding factors, this will pay off. You can “double dip.” Then you go back to Machine 1 so you won’t miss your opportunity, and start pulling the lever again.

After you have spent some time with the machines, and if there are no complications, your rate of pulling levers will probably approach the prediction of the matching law. If r1 is once per three minutes (1/3) and r2 is once per 15 minutes (1/15), then r1/r2 = (1/3)/(1/15) = 15/3 = 5. That predicts that you will pull the lever on Machine 1 approximately 5 times for every pull on the lever of Machine 2.
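The arithmetic in that paragraph can be checked with exact fractions. This sketch simply converts each machine’s average wait into a payout rate and then splits a hypothetical 60 pulls the way the matching law predicts.

```python
from fractions import Fraction

# Average wait between payouts, in minutes, for the two imaginary machines.
mean_interval = {"Machine 1": 3, "Machine 2": 15}

# Rate of reinforcement = payouts per minute = 1 / average wait.
rates = {name: Fraction(1, minutes) for name, minutes in mean_interval.items()}

ratio = rates["Machine 1"] / rates["Machine 2"]
print(ratio)  # 5

# Matching predicts each machine's share of pulls equals its share of payouts.
total_rate = sum(rates.values())
for name, rate in rates.items():
    share = rate / total_rate
    print(name, share, "of pulls, e.g.", share * 60, "of every 60 pulls")
```

Machine 1 gets 5/6 of the pulls (50 of every 60) and Machine 2 gets 1/6 (10 of every 60), the same 5:1 ratio.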

What About Ratio Schedules?

Some people say the matching law doesn’t apply to ratio schedules. But it does. It’s just dead simple. If there are two possible behaviors concurrently available, both on ratio schedules, with the same value of reinforcer, the best strategy is to note which behavior is on a denser schedule and keep performing it exclusively. If you are playing a ratio schedule slot machine that pays off about every 5th time, you have nothing to gain by running over and playing one that pays off about every 10th time. In the time it takes for you to go pull the other lever multiple times, you could have been getting more money for fewer pulls. So you find the best payoff and stay put.
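A quick way to see why exclusive choice wins on ratio schedules: on a VR schedule each pull earns, on average, 1/ratio of a payout, regardless of what happened before. The sketch below assumes exactly that and ignores the time spent walking between machines, which would only make switching look worse. The function name and the 100-pull session are hypothetical.

```python
from fractions import Fraction


def expected_payouts(total_pulls, share_on_a, ratio_a=5, ratio_b=10):
    """Expected payouts when `share_on_a` of the pulls go to a machine paying
    about every `ratio_a` pulls (VR ratio_a) and the rest go to one paying
    about every `ratio_b` pulls (VR ratio_b)."""
    pulls_a = total_pulls * Fraction(share_on_a)
    pulls_b = total_pulls - pulls_a
    # On a ratio schedule, each pull earns on average 1/ratio of a payout.
    return pulls_a / ratio_a + pulls_b / ratio_b


for share in ["0", "1/2", "4/5", "1"]:
    print(share, expected_payouts(100, share))
```

The expected payout climbs steadily from 10 to 20 as more pulls go to the VR5 machine, so the best allocation is all of them.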

To review the different strategies, let’s hear from some experts. Paul Chance summarizes the different successful approaches with ratio vs. interval schedules:

In the case of a choice among ratio schedules, the matching law correctly predicts choosing the schedule with the highest reinforcement frequency. In the case of a choice among interval schedules, the matching law predicts working on each schedule in proportion to the amount of reinforcers available on each. — (Chance, 2003)

Michael Domjan lists some situations in which behavior departs from the simple matching law:

Departures from matching occur if the response alternatives require different degrees of effort, if different reinforcers are used for each response alternative, or if switching from one response to the other is made more difficult. — (Domjan, 2000)

Life Is Not a Lab

There’s a reason psychologists perform their experiments in enclosures such as Skinner boxes, where there are very few visual, auditory, and other distractions: it minimizes competing reinforcers, both positive and negative. Now suppose our little experiment with the machines takes place in the real world. There are a thousand reasons your behavior might not exactly follow the simple two-alternative version of the matching law. Someone might be smoking next to Machine 1, and it makes you cough. There may be a glare on the screen of Machine 2 that gives you a headache every time you go over there. Maybe you are left-handed and one of the machines is more comfortable for you to play. Heck, you may need to leave to go to the bathroom. But you know what? The complications added by these other stimuli don’t “disprove” the matching law. They just force you to add more terms to the equation. In this example, most of the competing stimuli would lead to behaviors subject to negative reinforcement (escaping the smoke or the glare, for instance). Yep, the matching law works for negative reinforcement as well.

The matching law applies to reinforcer value, too. If the schedules were the same as described above but Machine 1 paid out only $50 and Machine 2 paid out $1,000, you might still pull the lever for Machine 1 more times. But you would be intent on not missing the opportunity for Machine 2. The ratio would skew towards Machine 2 as you pulled its lever more often.
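How far the ratio skews depends on how sensitive the chooser is to amount. The sketch below assumes one common extension of matching in which the amount ratio simply multiplies the rate ratio; `amount_sensitivity` is a hypothetical knob for someone who weighs amount less heavily than rate, and the 0.5 value is made up for illustration.

```python
def value_weighted_ratio(r1, r2, m1, m2, amount_sensitivity=1.0):
    """Predicted B1/B2 when both rate (r) and amount (m) of reinforcement differ.

    Assumes the amount ratio multiplies the rate ratio. An amount_sensitivity
    below 1 (hypothetical) means amount counts for less than rate does.
    """
    return (r1 / r2) * (m1 / m2) ** amount_sensitivity


# Machine 1: VI3 paying $50. Machine 2: VI15 paying $1,000.
full = value_weighted_ratio(1 / 3, 1 / 15, 50, 1000)
print(round(full, 2))  # 0.25: about 4 pulls on Machine 2 per pull on Machine 1

partial = value_weighted_ratio(1 / 3, 1 / 15, 50, 1000, amount_sensitivity=0.5)
print(round(partial, 2))  # about 1.12: Machine 1 still slightly ahead
```

Under full sensitivity the preference flips to Machine 2; with weaker sensitivity to amount, Machine 1 can still get slightly more pulls even though the ratio has moved a long way toward Machine 2.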

In the real world, there are always multiple reinforcers available, all on different but concurrent schedules. And concurrent schedules, in the lab and in life, are where we see the effects of the matching law. They are also why the matching law can bite us in the butt, time and again, in training. Consider loose leash walking. The outside world is full of potential reinforcers on concurrent schedules. On one side is your own schedule of reinforcement for loose leash walking, hopefully a generous one. It had better be, because you are competing with things like the ever-present interesting odors on the ground. Things like fire hydrants and favorite bushes probably pay off 100% of the time. Birds and squirrels are often available to sniff after and try to chase. What does this predict for your dog’s behavior when she is surrounded by all those rich reinforcement schedules?
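One rough way to put numbers on that question is the “lump everything else into other” form of the matching law: the share of behavior directed at you is your reinforcement rate divided by the total. The rates below are invented for illustration, and the sketch assumes all reinforcers are roughly equal in value, which real walks certainly violate.

```python
def share_to_target(target_rate, other_rates):
    """Predicted share of behavior going to the target when every other
    reinforcer source is lumped into "other": r / (r + r_other)."""
    other = sum(other_rates.values())
    return target_rate / (target_rate + other)


# Hypothetical reinforcers per minute during a walk (made-up numbers).
environment = {"sniffing": 4.0, "hydrants and bushes": 1.5, "squirrels": 0.5}

for handler_rate in (1.0, 2.0, 6.0):
    share = share_to_target(handler_rate, environment)
    print(f"handler pays {handler_rate}/min -> {share:.0%} of behavior toward you")
```

Under these made-up numbers, you have to match the whole environment’s payoff rate just to get half of your dog’s attention.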

When our dogs stray from the activities that we may prefer for them, they are doing what comes naturally to any organism. They are “shopping around” for the best deal. That tendency has survival value.

That “shopping around” is codified in the matching law. Given multiple behaviors on different schedules, the animal will learn the likelihood of payoffs for all of them and adjust its behavior accordingly. It also means that we trainers can adjust our own behavior, specifically our reinforcement schedules, so the matching law doesn’t make mincemeat of our training.

Helpful Resources

For further reading:

- The Matching Law: A Tutorial for Practitioners

- Choice, matching, and human behavior: A review of the literature.

References

Baum, W. M. (1974). On two types of deviation from the matching law: Bias and undermatching. Journal of the Experimental Analysis of Behavior, 22(1), 231-242. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1333261/pdf/jeabehav00117-0229.pdf

Chance, P. (2003). Learning and behavior. Wadsworth.

Domjan, M. (2000). The essentials of conditioning and learning. Wadsworth/Thomson Learning.

Herrnstein, R. J. (1961). Relative and absolute strength of response as a function of frequency of reinforcement. Journal of the Experimental Analysis of Behavior, 4(3), 267-272.

Thorndike, E. L. (1911). Animal intelligence: Experimental studies. Macmillan.

Photo Credits

Cookies photo courtesy of Sarah Fleming via Wikimedia Commons

By Sarah Fleming (originally posted to Flickr as Oven) [CC BY 2.0 (https://creativecommons.org/licenses/by/2.0)], via Wikimedia Commons

Woodpecker photo courtesy of JJ Harrison via Wikimedia Commons

By JJ Harrison [CC BY-SA 3.0 (https://creativecommons.org/licenses/by-sa/3.0)], from Wikimedia Commons

Tiger photo courtesy of SushG via Wikimedia Commons

By SushG [CC BY-SA 4.0 (https://creativecommons.org/licenses/by-sa/4.0)], from Wikimedia Commons

Slot machine photo adapted from Vincent Le Moign via Wikimedia Commons

Vincent Le Moign [CC BY 4.0 (https://creativecommons.org/licenses/by/4.0)], via Wikimedia Commons

Copyright 2018 Eileen Anderson